Our Research

Read about our current human-robot interaction projects below.

Telepresence for SAR

We aim to better understand use cases of mobile robots that connect people with far-away environments through videoconferencing and navigation. This older topic was an extension of Prof. Fitter’s postdoctoral research.

Assistive Robots for Children

Limited options are available for young children who require motion interventions. We view assistive robots as a key opportunity for improvement in this space. This work has been funded by the NSF National Robotics Initiative (under award CMMI-2024950) and the Caplan Foundation.

Expressive Robots

Robot expressiveness, from LED light displays to motion, is important to how people perceive and interact with robots. We work to better understand these topics. This work is supported by OMIC R&D.

Robots for Older Adults

As the population of older adults rises, we are investigating ways that technology can support healthy aging in the home and wellness in skilled nursing facilities. This work is supported by the NSF (under award IIS-2112633) and the NIH (under award 1R01AG078124).

Robots in the Arts

Jon the Robot, our lab’s robotic stand-up comedian, helps us study autonomous humor skills to make your future robots and virtual assistants better.

Robot Nudges

We are studying how small and low-cost socially assistive robots can encourage frequent computer users to take breaks and be more active during the workday. This work is supported by the NSF under award IIS-2441795.

Robot Policy

Everyday robots are here! We are studying how to facilitate better policymaking related to robots and how to help the general public understand the realities of robotic systems.

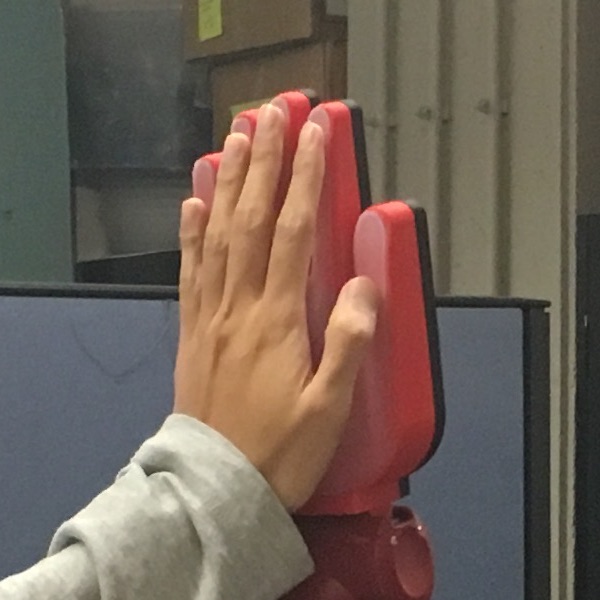

Robot Touch

Touch is an important part of human development and connection, but the touch abilities of robots are underdeveloped. We help robots feel things. This work has been funded by OMIC R&D.

Wearable Haptics

On-body systems that deliver haptic feedback have powerful potential for applications from improving navigation aids to assisting in anxiety management. This work has been funded by the OSU Advantage Accelerator.